Even the smallest software update can change how an entire system functions. Regression tests, which are run after each update, confirm that every element of our platform still works properly. RebelMouse runs regression tests continuously so that we stay efficient as our functionality expands and new features are introduced.

For each update of a production branch, we automatically begin regression tests. After tests are completed, we generate and review reports, and if there are any unexpected changes, we create tickets to revert changes and fix issues.

Here's a rundown of the different kinds of regression tests we perform:

Entry Editor as a Microservice

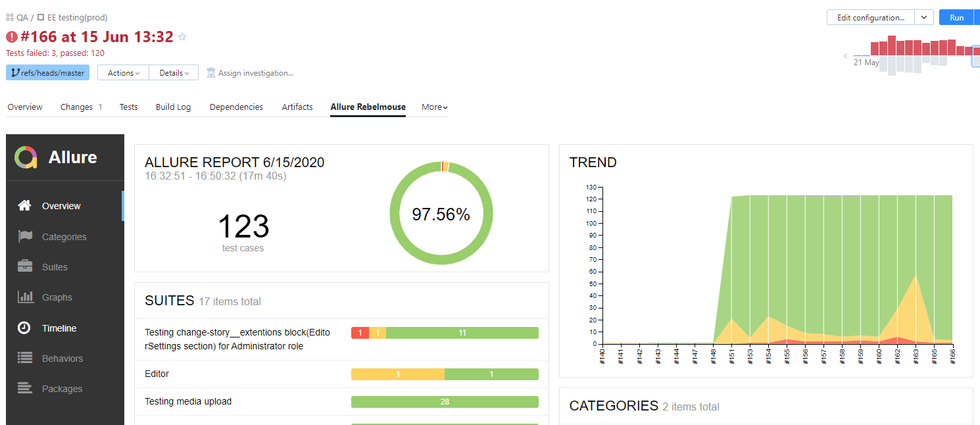

For our Entry Editor, regression tests check that all functions are working correctly. The tests run against content from a variety of users, which covers about 95% of possible use cases.

Here's what a sample test looks like:

AMP as a Microservice

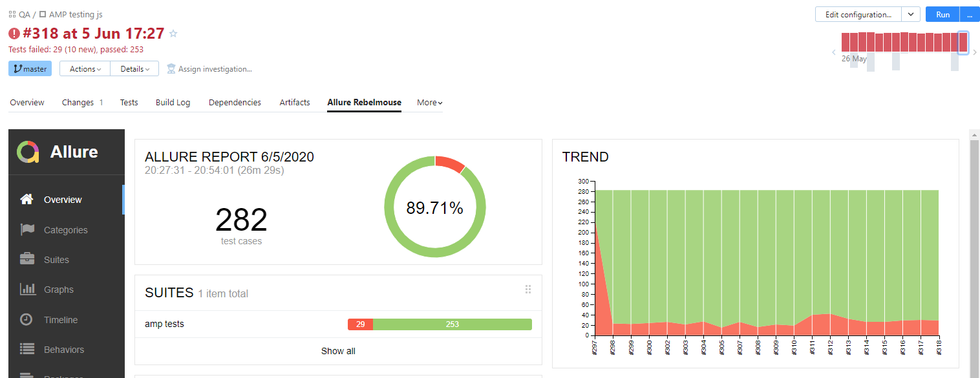

To check AMP functionality, we use a pixel-to-pixel comparison of the page view in the production version against the release candidate version. We also locate several interesting post examples on each of our clients' sites and check them for integrity before each update is deployed to production.
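As a rough illustration of what a pixel-to-pixel comparison involves, here is a minimal sketch in Python. It is not our actual implementation: the function names `diff_ratio` and `pages_match`, the `tolerance` parameter, and the in-memory image representation (rows of RGB tuples) are all assumptions made for the example. In practice the screenshots would be captured from a browser and loaded with an imaging library.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]   # one RGB pixel
Image = List[List[Pixel]]      # rows of pixels (hypothetical in-memory format)


def diff_ratio(production: Image, candidate: Image) -> float:
    """Return the fraction of pixels that differ between two same-sized images."""
    same_shape = len(production) == len(candidate) and all(
        len(p_row) == len(c_row) for p_row, c_row in zip(production, candidate)
    )
    if not same_shape:
        raise ValueError("screenshots must have identical dimensions")

    total = sum(len(row) for row in production)
    if total == 0:
        return 0.0

    # Count every position where the two pixels are not exactly equal.
    changed = sum(
        1
        for p_row, c_row in zip(production, candidate)
        for p, c in zip(p_row, c_row)
        if p != c
    )
    return changed / total


def pages_match(production: Image, candidate: Image, tolerance: float = 0.0) -> bool:
    """Treat the pages as matching when the share of changed pixels is within tolerance."""
    return diff_ratio(production, candidate) <= tolerance
```

With the default `tolerance` of `0.0`, a single changed pixel is enough to flag the page for review. A small non-zero tolerance is one way to absorb rendering noise such as anti-aliasing.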

Lighthouse

Page score results are saved to TeamCity's Build Artifacts, which lets us reliably monitor speed increases or decreases in a persistent environment.
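To show how saved scores can be used to spot a slowdown, here is a minimal sketch that compares the category scores in a Lighthouse JSON report against a stored baseline. It relies on the fact that Lighthouse reports list each category score as a value between 0 and 1 under `categories`. The helper names `category_scores` and `regressions` and the `max_drop` threshold are hypothetical and do not describe our TeamCity setup.

```python
import json
from typing import Dict, Tuple


def category_scores(report_json: str) -> Dict[str, float]:
    """Extract category scores (0 to 1) from a Lighthouse JSON report string."""
    report = json.loads(report_json)
    return {
        name: category["score"]
        for name, category in report["categories"].items()
        if category.get("score") is not None  # skip categories Lighthouse could not score
    }


def regressions(
    baseline: Dict[str, float],
    current: Dict[str, float],
    max_drop: float = 0.05,  # assumed threshold, chosen only for illustration
) -> Dict[str, Tuple[float, float]]:
    """Return categories whose score fell by more than max_drop, as (baseline, current)."""
    return {
        name: (baseline[name], score)
        for name, score in current.items()
        if name in baseline and baseline[name] - score > max_drop
    }
```

For example, a baseline of `{"performance": 0.92}` and a new report scoring `0.80` for performance would be flagged, because the drop of 0.12 exceeds the 0.05 threshold.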

Dashboards

Full-fledged regression testing on our dashboards is still in progress. Currently, we have partially implemented tests on our Posts and Users dashboards, and our rendering engine has 90% unit test coverage on public pages, with smoke tests run via TeamCity on each deployment.

If you have any questions about the way we perform our regression testing, email support@rebelmouse.com or talk to your account manager today.
