Setting up a proper XML sitemap, a key component of technical SEO, is crucial for any website. It provides a roadmap to all of the content you want to be accessible, showing search crawlers which pages are the most important so they’re not missed.

It’s a good practice to set up multiple sitemaps, one for videos, one for news, and so on. You’ll list all of the sitemaps in your robots.txt file so they’re easy to find, like on Panorama.
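As a rough illustration of how sitemaps are listed in robots.txt, the sketch below parses a robots.txt body with Python's standard `urllib.robotparser` and returns the sitemap URLs it declares. The domain `example.com` and the file contents are placeholders, not taken from any real site.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; example.com and the paths are placeholders.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/

Sitemap: https://example.com/sitemap.xml
Sitemap: https://example.com/sitemap_news.xml
Sitemap: https://example.com/sitemap_video.xml
"""


def listed_sitemaps(robots_txt: str) -> list[str]:
    """Return the sitemap URLs declared in a robots.txt body."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    # site_maps() returns None when no Sitemap lines are present.
    return parser.site_maps() or []


if __name__ == "__main__":
    for url in listed_sitemaps(ROBOTS_TXT):
        print(url)
```

Each `Sitemap:` line stands alone, so it can appear anywhere in the file and is not tied to a particular `User-agent` group.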

A properly set up sitemap excludes posts that you don’t want indexed, like drafts, unlisted articles, or private content. When we set up your sitemaps at RebelMouse, we make sure all of this is done correctly so that there are no errors.

There are many aspects of XML sitemaps that are worth exploring. Let’s get started.

- Why Sitemaps Are Important

- How We Set Up Sitemaps at RebelMouse

- How to Submit a Sitemap to Google

- Sitemap Best Practices

- Troubleshooting Sitemap Issues in Google Search Console

Why Sitemaps Are Important

Google Search Central defines a sitemap as “a file where you provide information about the pages, videos, and other files on your site, and the relationships between them.” Quite simply, sitemaps tell search engine crawlers: These are the pages and files on our website that are most important to us. They ensure that your most relevant pages are discovered and indexed, potentially improving your site’s ranking in search engine results pages.

A sitemap helps crawlers discover new content and index it quickly, which is beneficial to websites that frequently publish new content or make edits to old content. Sitemaps are not limited to one type of content: they can incorporate photos, videos, and news articles, which helps search engines understand the context and relevance of your content and how it fits together.

One of the best use cases for sitemaps is that they help you identify crawling issues. For this reason, they should be reviewed regularly and any problems discovered should be addressed as soon as possible. By fixing outstanding issues, you ensure that your site is properly crawled and indexed.

Sitemaps are especially important for sites that fit any one of the following criteria:

- Your site is very large. This makes it more likely that search engine crawlers miss some of your new or recently updated pages without a good sitemap.

- You have a large archive of content pages that are isolated or not linked well to each other. You can list them in a sitemap to ensure they’re not overlooked.

- Your site is new and has few external links pointing at it. Web crawlers like Googlebot crawl the web by following links from one page to another. Without a sitemap, Google might not discover your pages due to a lack of inbound links.

- Your site uses rich media content, appears in Google News, or uses other sitemap-compatible annotations. Google can take additional information from sitemaps into account, where appropriate.

How We Set Up Sitemaps at RebelMouse

Your sitemaps are set up for you out of the box. Currently we support the following sitemaps on RebelMouse:

- /sitemap.xml (for published posts)

- /sitemap_news.xml (for posts published within the last two days)

- /sitemap_video.xml (for published posts with video content in the lead media)

- /sitemap_pages.xml (for layouts)

- /sitemap_sections.xml (for public sections)

- /sitemap_custom_pages.xml (for intersection)

- /sitemap_section_content/section.xml (for a section — example)

- /sitemap_section_content/parent_section/child_section.xml (for a child section — example)

- /sitemap_tags.xml (for tags)

Regular sitemaps, news sitemaps, and video sitemaps do not include all posts. The following posts are excluded from sitemaps:

- Articles with an “Unpublished” status

- Removed posts

- Drafts

- Community posts

- Private posts

  - A post is private if all sections assigned to it are set up as private

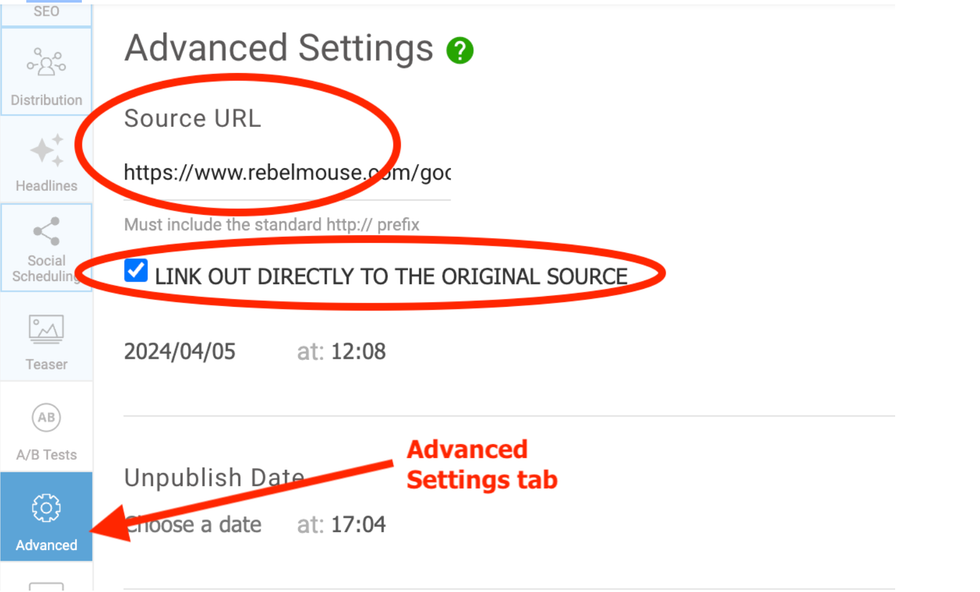

- Direct link outs

  - These are posts with source URLs (i.e., original URLs) that link directly to an external site

  - This is designated in the Advanced tab of Entry Editor where you’ll find “Source URL” and the ability to link out directly to the original source

- Posts specifically excluded from search results

  - “Exclude from search engines” is an option in Entry Editor in our SEO tab

- Suspicious posts
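The exclusion rules above can be pictured as a single filter. The following is only a sketch: the `Post` record and all of its field names are hypothetical and do not reflect RebelMouse's actual data model. It also assumes that a post with no sections at all is not treated as private.

```python
from dataclasses import dataclass, field


@dataclass
class Post:
    """Hypothetical post record; field names are illustrative only."""
    url: str
    status: str = "published"  # e.g. "published", "unpublished", "draft", "removed"
    is_community: bool = False
    section_private_flags: list[bool] = field(default_factory=list)
    source_url_links_out: bool = False  # direct link out to an external site
    exclude_from_search: bool = False   # "Exclude from search engines" option
    is_suspicious: bool = False


def is_private(post: Post) -> bool:
    # Private only if the post has sections and every one of them is private
    # (treating a post with no sections as public is an assumption).
    return bool(post.section_private_flags) and all(post.section_private_flags)


def belongs_in_sitemap(post: Post) -> bool:
    """Apply every exclusion rule from the list above."""
    return (
        post.status == "published"
        and not post.is_community
        and not is_private(post)
        and not post.source_url_links_out
        and not post.exclude_from_search
        and not post.is_suspicious
    )
```

For example, a published post assigned to one private and one public section would still be listed, because not all of its sections are private.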

How to Submit a Sitemap to Google

Submitting a sitemap to Google is extremely easy to do in Google Search Console. Simply follow these steps:

- Log in to Google Search Console for your website

- Navigate to Sitemaps on the left side under “Indexing”

- Add a new sitemap by providing the URL at the top and select “Submit”

- See if each sitemap is successfully submitted in the “Status” column

In some cases, you may want to remove a sitemap. Go to your sitemaps and select the sitemap that you’d like to remove. Click the three dots in the top right of the screen for that sitemap and select “Remove sitemap.”

Sitemap Best Practices

Here are some best practices to keep in mind as you set up your sitemaps:

- XML format: Use the XML file type, which is the preferred format for sitemaps and how we set up yours out of the box, since it’s specifically designed for search engines to understand the makeup of your site.

- Make sure your sitemap is UTF-8 encoded: This ensures every character, regardless of language or special symbols, is properly read by crawlers. Yours is set up like this out of the box.

- Create different sitemaps for different types of content (e.g., video, image, etc.).

- Properly size your sitemaps so that each one is no larger than 50 MB (uncompressed) and contains no more than 50,000 URLs. This is covered for you out of the box on RebelMouse.

- Set up a sitemap index if you have more than one sitemap. We do this for you on RebelMouse.

- Submit your sitemaps to Google Search Console using the steps in the How to Submit a Sitemap to Google section above.

- Include your sitemaps in your robots.txt file.

- Update your sitemaps regularly. Keep your sitemaps dynamic as you make changes to your website. This helps search engines find new content and crawl properly. We do this for you out of the box on RebelMouse.

- Troubleshoot sitemap issues. Google Search Console indicates when there are issues. Make sure to address those to fully optimize your sitemaps. For more on this, check out the Troubleshooting Sitemap Issues section below.
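To make the size limits and the sitemap index concrete, here is a minimal sketch using Python's standard `xml.etree.ElementTree`. It splits a URL list into chunks of at most 50,000 entries, writes each chunk as a UTF-8 encoded `urlset`, and builds an index that points at the chunks. The function names and the `example.com` URLs are made up for illustration. On RebelMouse this is handled for you, so the code only shows how the pieces relate.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS_PER_SITEMAP = 50_000
MAX_BYTES = 50 * 1024 * 1024  # 50 MB, uncompressed


def chunk_urls(urls, size=MAX_URLS_PER_SITEMAP):
    """Split a list of URLs into lists of at most `size` entries."""
    return [urls[i:i + size] for i in range(0, len(urls), size)]


def build_urlset(urls):
    """Serialize one sitemap file as UTF-8 encoded XML bytes."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        ET.SubElement(ET.SubElement(urlset, "url"), "loc").text = url
    return ET.tostring(urlset, encoding="utf-8", xml_declaration=True)


def build_index(sitemap_urls):
    """Serialize a sitemap index that lists each child sitemap."""
    index = ET.Element("sitemapindex", xmlns=SITEMAP_NS)
    for url in sitemap_urls:
        ET.SubElement(ET.SubElement(index, "sitemap"), "loc").text = url
    return ET.tostring(index, encoding="utf-8", xml_declaration=True)


def within_limits(urls, xml_bytes):
    """Check both limits: URL count and uncompressed file size."""
    return len(urls) <= MAX_URLS_PER_SITEMAP and len(xml_bytes) <= MAX_BYTES
```

A site with 120,001 URLs would end up with three child sitemaps (50,000, 50,000, and 20,001 URLs) plus one index file that references them.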

Here are some more tips for setting up sitemaps properly:

- Include all important pages: Make sure that every page you want indexed is included in your sitemaps, like main content, category pages, etc.

- Include only 200 status code URLs: These are web pages or resources that can be successfully accessed. The “200 OK” code indicates that the request was successful and the correct resource is returned by the server to the user.

- Include only canonical URLs: You want original content to be indexed. If something is pointing somewhere else, exclude it.

- Don’t include URLs disallowed by your robots.txt: Listing a URL that crawlers are blocked from fetching sends mixed signals and wastes space in your sitemaps.

- Don’t include URLs with a “noindex” tag: Again, this sends mixed signals and wastes space.
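The tips above amount to a filter over candidate URLs. Below is a small sketch of that filter, assuming you already have a crawl record for each page. The `Page` class and its fields are hypothetical, and the robots.txt check relies on the standard library's `urllib.robotparser`.

```python
from dataclasses import dataclass
from urllib.robotparser import RobotFileParser


@dataclass
class Page:
    """Hypothetical crawl record for one URL; field names are illustrative."""
    url: str
    status_code: int
    canonical_url: str
    noindex: bool = False


def sitemap_candidates(pages, robots_txt, user_agent="Googlebot"):
    """Keep only URLs that are 200, canonical, indexable, and crawlable."""
    robots = RobotFileParser()
    robots.parse(robots_txt.splitlines())
    return [
        page.url
        for page in pages
        if page.status_code == 200
        and page.canonical_url == page.url
        and not page.noindex
        and robots.can_fetch(user_agent, page.url)
    ]
```

Running a check like this before publishing a sitemap is one way to catch entries that would later surface as issues in Google Search Console.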

Google News Sitemaps

RebelMouse supports Google News sitemaps, which align with Google’s specifications for how articles should be treated in a sitemap.

Google News sitemaps identify key criteria for each article, like genre, access tag, title, publication, and date. They allow Google to quickly determine which pages on your site are news articles so that the content can be surfaced in Google News. According to Google, it’s best practice for Google News sitemaps to only show a site’s posts from the previous 48 hours. Adhering to Google’s guidelines has led to great benefits for many sites powered by RebelMouse.

Sitemaps built for Google News often include valuable metadata, like when the page was last updated, how often the page has changed, and the importance of the page relative to other URLs on your site. They can also include images.

There are two main reasons to build a sitemap specifically for Google News. It will help Google discover news articles from your site faster, and make sure all relevant news articles are crawled and indexed.

Google News sitemaps for websites powered by RebelMouse typically follow this URL structure:

- domain.com/sitemap_news.xml

Here's an example of a news sitemap from RebelMouse client Raw Story.
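For readers who want to see the shape of such a file, here is a hedged sketch that builds a news sitemap with Python's standard library and keeps only articles from the previous 48 hours. It emits only the publication name, language, publication date, and title tags, which is my understanding of what Google's news sitemap documentation currently requires. The function name, input tuple layout, and sample values are assumptions for illustration, not RebelMouse's implementation.

```python
import xml.etree.ElementTree as ET
from datetime import datetime, timedelta, timezone

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
NEWS_NS = "http://www.google.com/schemas/sitemap-news/0.9"
ET.register_namespace("", SITEMAP_NS)
ET.register_namespace("news", NEWS_NS)


def build_news_sitemap(articles, publication, language, now=None):
    """articles: iterable of (url, title, published_at) with aware datetimes."""
    now = now or datetime.now(timezone.utc)
    cutoff = now - timedelta(hours=48)
    urlset = ET.Element(f"{{{SITEMAP_NS}}}urlset")
    for url, title, published_at in articles:
        if published_at < cutoff:
            continue  # older than 48 hours, so leave it out
        node = ET.SubElement(urlset, f"{{{SITEMAP_NS}}}url")
        ET.SubElement(node, f"{{{SITEMAP_NS}}}loc").text = url
        news = ET.SubElement(node, f"{{{NEWS_NS}}}news")
        pub = ET.SubElement(news, f"{{{NEWS_NS}}}publication")
        ET.SubElement(pub, f"{{{NEWS_NS}}}name").text = publication
        ET.SubElement(pub, f"{{{NEWS_NS}}}language").text = language
        date = ET.SubElement(news, f"{{{NEWS_NS}}}publication_date")
        date.text = published_at.isoformat()
        ET.SubElement(news, f"{{{NEWS_NS}}}title").text = title
    return ET.tostring(urlset, encoding="utf-8", xml_declaration=True)
```

Passing `now` explicitly makes the 48-hour cutoff easy to test. In normal use it defaults to the current UTC time.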

Video Sitemaps

RebelMouse supports and encourages video sitemaps for every web property we power. We support video sitemaps for JW Player and YouTube out of the box.

According to Google, creating a video sitemap is a great way to help Google find and understand the video content on your site, especially videos that were recently added or might be difficult to find through the usual crawling process.

The default video sitemap for sites powered by RebelMouse typically appears at:

- domain.com/sitemap_video.xml

Here’s an example of a video sitemap from RebelMouse client GZERO Media.

For more information, check JW Player’s guide to building a video sitemap and Google’s official documentation on video sitemaps. Be sure to follow video SEO best practices to ensure your video content is best optimized for search.
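As a rough picture of what a video sitemap entry contains, the sketch below builds one with Python's standard library. It includes only a thumbnail, title, description, and player location for each video. The dictionary keys and the function name are hypothetical, and Google's documentation remains the authority on which tags are required.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
VIDEO_NS = "http://www.google.com/schemas/sitemap-video/1.1"
ET.register_namespace("", SITEMAP_NS)
ET.register_namespace("video", VIDEO_NS)


def build_video_sitemap(entries):
    """entries: iterable of dicts with page_url, title, description,
    thumbnail_url, and player_url keys (all hypothetical field names)."""
    urlset = ET.Element(f"{{{SITEMAP_NS}}}urlset")
    for entry in entries:
        url = ET.SubElement(urlset, f"{{{SITEMAP_NS}}}url")
        ET.SubElement(url, f"{{{SITEMAP_NS}}}loc").text = entry["page_url"]
        video = ET.SubElement(url, f"{{{VIDEO_NS}}}video")
        # Map each video tag to the entry key that supplies its value.
        for tag, key in (
            ("thumbnail_loc", "thumbnail_url"),
            ("title", "title"),
            ("description", "description"),
            ("player_loc", "player_url"),
        ):
            ET.SubElement(video, f"{{{VIDEO_NS}}}{tag}").text = entry[key]
    return ET.tostring(urlset, encoding="utf-8", xml_declaration=True)
```

The `loc` value is the page that hosts the video, while the video tags describe the media embedded on that page.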

Make Images Discoverable in Sitemaps

Sitemaps created by RebelMouse include images by default, and they have the appropriate markup so that they’re aligned with Google’s image SEO best practices.

You might consider creating a separate image sitemap if images are important to your website and you want to make sure they are indexed properly and not missed. Unlike regular sitemaps, you can list URLs from other domains under

Troubleshooting Sitemap Issues in Google Search Console

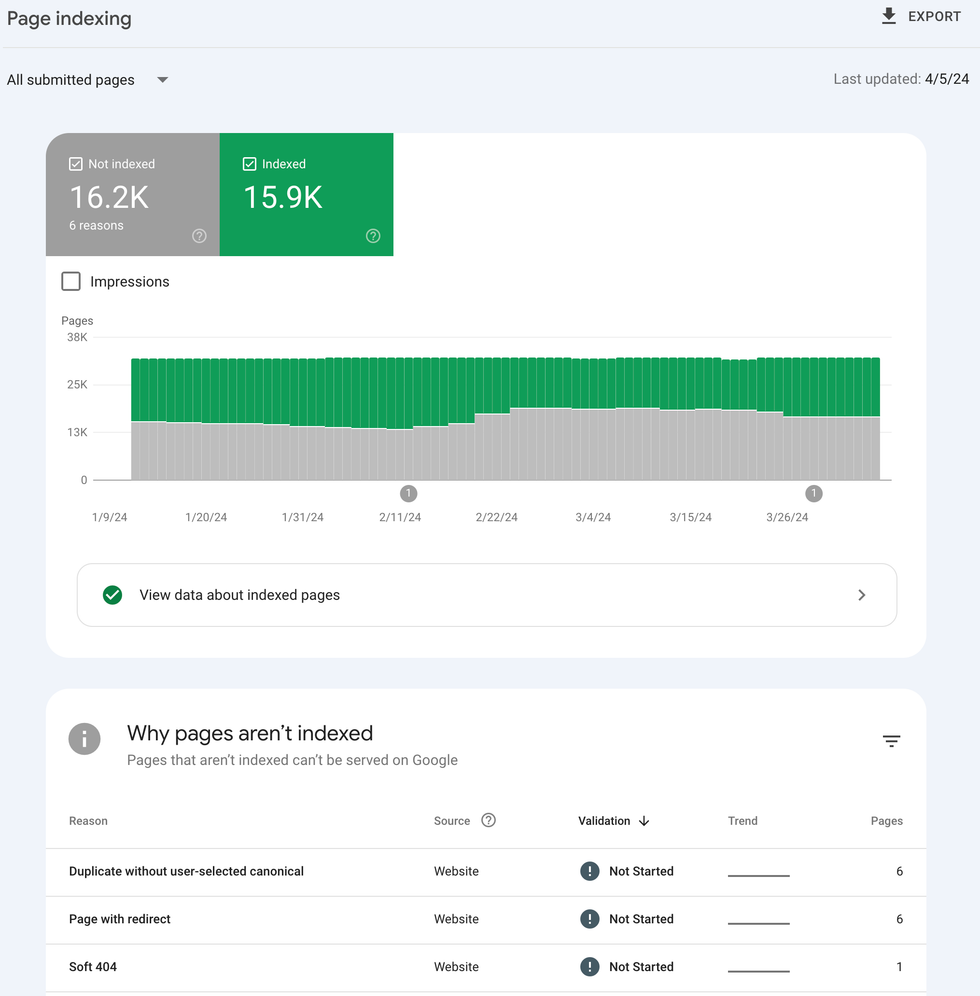

When there are problems with your sitemap, you can find those issues listed in Google Search Console. Navigate to “Indexing,” then “Pages,” and then toggle to “All Submitted Pages” at the top of the screen. You’ll see something like the following, including a list of issues for why pages aren’t being indexed.

Here are the issues you’ll see pop up, along with how to handle each, according to Google:

- Soft 404: The page shows a “not found” message but does not return a 404 status code. Soft 404s are problematic because they return a 200 (success) status code while still showing users an error page. It’s better to delete the page, redirect it to a working page, or improve the page by adding content. Use the URL Inspection Tool to check the latest status of a URL.

- Blocked due to unauthorized request (401): The page was blocked because the user is “unauthorized.” Remove authorization requirements or otherwise verify Googlebot to allow it.

- Blocked due to access forbidden (403): The user agent was not granted access. If you still want Google to crawl it, verify Googlebot like above.

- Not found (404): The page was not found when requested, delivering a 404 code. Check why the page that is returning a 404 is in your sitemap and remove it from the sitemap to resolve this issue.

- URL blocked due to another 4xx issue: This means there’s another error, not one of the issues listed above. Use Google’s URL Inspection Tool to debug it.

- Crawled — currently not indexed: The URL has been crawled already but not indexed. It may be indexed later. No need to submit this URL for crawling.

- Discovered — currently not indexed: Google came across the page but didn’t crawl it yet. It will crawl it later or you can submit the URL in Google Search Console to request a crawl.

- Alternate page with proper canonical tag: This is an alternate form of another page (typically a mobile version of a desktop canonical, or a desktop version of a mobile canonical). Check why it is in the sitemap and remove it, since sitemaps should only include canonical URLs.

- Duplicate without user-selected canonical: The page is a duplicate but it doesn’t indicate the preferred canonical page. Google has chosen the other page as the canonical, so this one will not appear in Google Search. Google’s URL Inspection Tool allows you to see which page Google considers canonical.

  - If Google chose the wrong URL as the canonical, you can address this by specifying the canonical (rel="canonical") for the page.

  - If you don’t believe it should be a duplicate, make meaningful changes so that the content is not considered a duplicate.

- Duplicate, Google chose different canonical than user: The page is marked canonical, but Google thinks another URL is the better canonical and indexed that instead. Inspect the URL to see what Google chose as the canonical.

- Page with redirect: This is a non-canonical URL that redirects to another page, so this URL will not be indexed. It should be removed from the sitemap. (Note: A canonical URL with a redirect can be indexed.)
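Several of the issues above map directly to HTTP status codes, so a quick pre-check of your sitemap URLs can flag them before Google does. The sketch below is only an approximation: it is not how Google classifies pages, the function names are made up, and it cannot detect soft 404s, duplicates, or indexing delays. It takes status codes you have already collected rather than fetching anything itself.

```python
def search_console_issue(status_code: int) -> str:
    """Map an HTTP status code to the closest issue label from the list above."""
    if status_code == 401:
        return "Blocked due to unauthorized request (401)"
    if status_code == 403:
        return "Blocked due to access forbidden (403)"
    if status_code == 404:
        return "Not found (404)"
    if 400 <= status_code < 500:
        return "URL blocked due to another 4xx issue"
    if 300 <= status_code < 400:
        return "Page with redirect"
    if status_code == 200:
        return "OK (could still be a soft 404 or a duplicate)"
    return "Other"


def audit(statuses: dict[str, int]) -> dict[str, str]:
    """Return only the URLs whose status suggests a sitemap problem."""
    return {
        url: search_console_issue(code)
        for url, code in statuses.items()
        if code != 200
    }
```

Any URL that `audit` returns is a candidate for removal from the sitemap or for a fix on the page itself.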

Still have questions? Get in touch and we’ll be happy to have one of our strategists assist you with creating and maintaining your sitemaps.